Creating a backup solution with S3 and cron

August 05, 2020

It’s important to always have a backup of your data. In general, you should view your computer as a tool (replaceable), but your data as indispensable (not replaceable). I had a boot failure recently that prompted me to build a basic backup solution using S3.

Configure a centralized synced folder

First, I centralized all my data into a single folder that would be backed up. I kept this folder on my Desktop for easy visibility.

I also removed the default Desktop/Documents/Videos links in the Ubuntu file manager so that I do not accidentally store files in any other location. It was pretty easy: I just followed this guide, which removes those folders from Nautilus. You can optionally delete these folders from your home directory.

Create S3 bucket and Configure IAM User

Next, we need to create an S3 bucket, create an IAM user (and download its credentials to interact with S3 programmatically), and install the AWS CLI. Sign up for an AWS account if you do not have one.

Follow this guide to learn how to create an IAM User and an S3 bucket. Although not needed, more information on IAM users in general is found here.

Install the AWS CLI

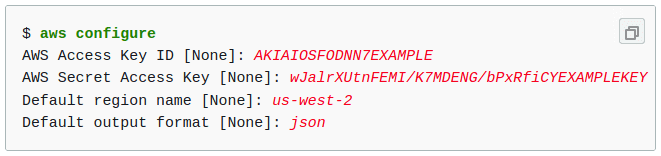

Run aws configure to set up the credentials. Choose the correct region you are creating AWS resources in.

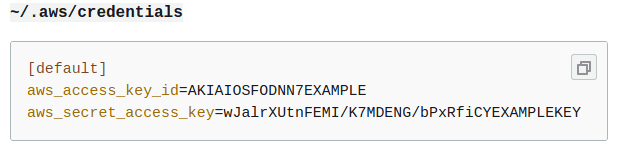

(or open ~/.aws/credentials and add the credentials manually)
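To confirm that the credentials were picked up, one option is to ask AWS which identity the CLI is using. The sketch below wraps aws sts get-caller-identity in a small function. The function name and the AWS_BIN override are hypothetical (AWS_BIN only exists so the check can be exercised without a real AWS account); they are not part of my original setup.

```shell
# Sketch: check that the AWS CLI can authenticate with the configured credentials.
# AWS_BIN is a hypothetical override; it defaults to the real "aws" binary.
verify_aws_credentials() {
  # "aws sts get-caller-identity" succeeds only when valid credentials are found.
  if "${AWS_BIN:-aws}" sts get-caller-identity >/dev/null 2>&1; then
    echo "AWS credentials OK"
  else
    echo "AWS credentials missing or invalid" >&2
    return 1
  fi
}
```

Calling verify_aws_credentials right after aws configure should print the OK message if the access key and secret were entered correctly.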

Create Syncing Script

Next, I created a script to sync my local folder with my S3 bucket. The script is as below (folder paths redacted):

    aws s3 sync ${LOCAL_FOLDER_PATH} s3://${S3_PATH} \
        --exclude "*/node_modules" \
        --exclude "*/build/" \
        --exclude "*/target" \
        --exclude "*.classpath" \
        --exclude "*.factorypath" \
        --exclude "*.settings" \
        --exclude "*.iml" \
        --exclude "*.project" \
        --no-follow-symlinks \
        --sse \
        --delete

The script syncs the local folder with the S3 path, excluding some files that should not be uploaded (node_modules, target, etc.).

- The --no-follow-symlinks flag is set to avoid possible infinite recursion with symlinks.
- --sse is set to enable server-side encryption on the files. When no value is specified, it uses AES-256 encryption (you can provide your own keys with AWS KMS).
- --delete is set to delete any files in the S3 bucket that are not present in the local folder. This keeps the two folders in sync, as opposed to the S3 folder only gaining new files and never dropping old ones.
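Because --delete removes objects from the bucket, it can be worth previewing a sync before running it for real. Here is a minimal sketch that wraps the sync command in a function and passes any extra flags through, so that the CLI's --dryrun flag can be added on demand. The function name and the AWS_BIN override are hypothetical, LOCAL_FOLDER_PATH and S3_PATH stand for the same redacted values as above, and the --exclude flags are left out for brevity.

```shell
# Sketch: run the sync with optional extra flags (for example --dryrun).
# AWS_BIN is a hypothetical override; it defaults to the real "aws" binary.
# LOCAL_FOLDER_PATH and S3_PATH must be set by the caller.
sync_folder() {
  "${AWS_BIN:-aws}" s3 sync "${LOCAL_FOLDER_PATH}" "s3://${S3_PATH}" \
    --no-follow-symlinks \
    --sse \
    --delete \
    "$@"
}
```

With this in place, sync_folder --dryrun lists the uploads and deletions that would happen without performing them, and a plain sync_folder runs the actual sync.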

Schedule the script with cron

Lastly, I configured the script to run every day at 6pm.

To view your current user cron configuration, use crontab -l

crontab -l

Edit the crontab to run your script whenever you desire:

crontab -e

Sample of an edited crontab that runs the sync script at 6pm every day:

    SHELL=/bin/bash
    PATH=/bin:/sbin:/usr/bin:/usr/sbin:/usr/local/bin:/usr/local/sbin

    # For details see man 4 crontabs

    # Example of job definition:
    # .---------------- minute (0 - 59)
    # |  .------------- hour (0 - 23)
    # |  |  .---------- day of month (1 - 31)
    # |  |  |  .------- month (1 - 12) OR jan,feb,mar,apr ...
    # |  |  |  |  .---- day of week (0 - 6) (Sunday=0 or 7) OR sun,mon,tue,wed,thu,fri,sat
    # |  |  |  |  |
    # *  *  *  *  * user-name  command to be executed

    # Run S3 sync at 6pm everyday
    0 18 * * * /sync-folder-to-s3.sh

    # Edit this file to introduce tasks to be run by cron.
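If you would rather not edit the crontab interactively, the entry can also be added from a script. The sketch below is one possible approach, not what I originally did: a hypothetical helper, add_cron_entry, reads an existing crontab on standard input and prints it back with the new line appended only if that exact line is not already there.

```shell
# Sketch: append a cron line to a crontab read from stdin, without duplicating it.
# add_cron_entry is a hypothetical helper name.
add_cron_entry() {
  new_entry="$1"
  existing="$(cat)"
  if printf '%s\n' "$existing" | grep -Fxq -- "$new_entry"; then
    # The exact line is already present: print the crontab unchanged.
    printf '%s\n' "$existing"
  elif [ -z "$existing" ]; then
    # No crontab yet: the new entry is the whole file.
    printf '%s\n' "$new_entry"
  else
    printf '%s\n%s\n' "$existing" "$new_entry"
  fi
}

# Possible usage (this rewrites your crontab, so check the output of the first
# two stages before piping it into "crontab -"):
#   crontab -l 2>/dev/null | add_cron_entry '0 18 * * * /sync-folder-to-s3.sh' | crontab -
```

Because the helper only prints text, you can run the first two stages of the pipeline on their own to inspect the result before installing it.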

Things to note when using crontab